That the outcome of an AI-powered system is systematically incorrect doesn’t make it useless.

The year 1980 marked my initial exposure to the concept of artificial intelligence.

Upon starting my role as a sales trainee at the Danish branch of Control Data Corporation (CDC), I was tasked with selling the company’s mainframe computers, which were then the world’s most powerful. However, by the end of the two-year training period, circumstances had changed drastically, and something entirely unexpected had occurred.

CDC established its dominance in the supercomputer market after unveiling its System 6600 in 1963. The impact was so profound that IBM’s CEO, Thomas Watson Jr., promptly drafted an internal memo to his development head in response to the shock. Soon after, IBM began losing business to the Minnesota-based firm as customers needed the added computing capabilities only CDC could provide. As a result, orders flooded in for CDC’s supercomputers.

Because IBM was a significantly larger company, most clients would likely have preferred to transact with them. However, IBM did not have the product then, nor did they have anything in the pipeline that could compete with CDC’s machines.

So, to prevent customers from doing business with a competitor, IBM announced that it would soon launch a more powerful computer than CDC’s and that it would be compatible with its successful System/360 machines.

That worked!

Customers started to postpone their purchase decision for additional computer capacity to see what IBM would offer. As a result, the order intake for Control Data thinned out.

But no machine came from IBM. The announcement was a FUD manoeuvre. Fear. Uncertainty. Doubt.

Therefore, Control Data sued IBM for unfair competition.

Long story short: IBM ended up offering a settlement estimated to be worth USD 80 million (equivalent to USD 650 million, or DKK 4.5 billion, today), and CDC accepted it. IBM was not convicted of unfair competition. Instead, the company chose to pay to avoid having a judge or jury rule on the issue.

And now to artificial intelligence

As part of the proceedings, the court asked IBM to hand over internal documents that could clarify whether the company reasonably believed that it would be able to complete the development of and ship a computer as advertised.

To make the work for Control Data’s lawyers more difficult and expensive, IBM delivered trucks full of documents. So enormous was the number of documents that Control Data could not possibly afford to have lawyers review them all.

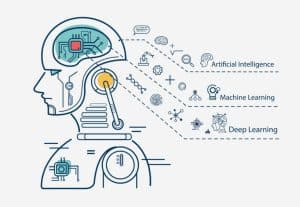

Instead, the nimble Minnesota company used OCR technology to scan the documents and store them digitally. Then, they developed a program (AI) that could find precisely the combination of phrases they needed while presenting their case. The combination of scanning, storing and searching enabled Control Data to make IBM nervous. The technology was called Artificial Intelligence because it could do something that otherwise required an army of people, working for years.
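To give a feel for what "finding the combination of phrases" means in practice, here is a minimal Python sketch. It is an illustration only, not a reconstruction of Control Data's program: the function name `find_documents`, the document ids and the sample texts are all hypothetical, and it assumes the scanned documents are already available as plain text.

```python
def find_documents(documents, phrases):
    """Return the ids of documents whose text contains every phrase (case-insensitive)."""
    wanted = [phrase.lower() for phrase in phrases]
    return [
        doc_id
        for doc_id, text in documents.items()
        if all(phrase in text.lower() for phrase in wanted)
    ]


# Hypothetical sample texts standing in for scanned, OCR-processed documents.
documents = {
    "doc-001": "The new machine will ship next year, subject to review.",
    "doc-002": "Review result: the new machine cannot ship on the announced schedule.",
    "doc-003": "Quarterly sales figures for the existing product line.",
}

# Only documents that contain BOTH phrases are returned.
matches = find_documents(documents, ["new machine", "announced schedule"])
print(matches)
```

A plain substring scan like this is fine for a handful of texts. For millions of documents, a real system would need an index built in advance, which is outside the scope of this sketch.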

The technology was so successful that IBM’s settlement with Control Data was conditional on the database, with its millions of documents, being deleted. Deleting the database was a significant inconvenience for similar cases against IBM that were being prepared at the time. But what won’t you do for a few hundred million? The AI software became the product BASIS, which for many years was used for free text searching on CDC’s machines.

Research and copywriting

Then, for the next forty years, I didn’t pay much attention to the subject.

AI got ample coverage in the media, but it was always about whether a computer could replace the human brain or if it could have feelings. Subjects I found uninteresting. I knew the answers. And they were boring.

I also occasionally read books on the subject.

But then I got the opportunity to become the editor and publisher of a book about AI and had to dive into the area again. When ChatGPT was launched, I started experimenting hands-on with the technology.

My work includes two activities that take a considerable amount of time: research and writing.

Like so many other authors, I have spent days at the Royal Library digging into old archives. And I have spent a zillion hours searching the internet from a browser. Research takes time, and much sludge must be removed before you find the gold nuggets.

Is ChatGPT the new fact-finding productivity tool?

Yes, it might be. In many cases, ChatGPT provides a faster response than a search that lists a series of references.

But the response to your query is not always correct.

So, if you are working on a topic that you know only a little about, it can be challenging to determine whether the answer you get from ChatGPT or similar services is correct. How do you validate information on a subject where you have little or no domain expertise? It is a challenge that applies to all research and requires liaising with a subject matter expert.

Copywriting

My other main task is copywriting. As my native language is Danish, I need support for my English copy. So far, Grammarly has been a helpful coach.

ChatGPT and many other services offer to rephrase shorter texts. And it works well. You can give the service a quick version of your text, and it suggests several alternatives. It’s not perfect, but it is often better than the starting point. You can use Grammarly to correct some of the errors ChatGPT makes; others you must fix manually.

Another approach is to write the copy in your native language and ask a service, such as Google Translate, to do the translation. Again, this is a good starting point. However, it requires much manual correction before it is acceptable. That’s why a human with English as her native language does the final proofing.

Artificial intelligence will always make mistakes

The title of this section might indicate that AI will always be wrong, but that is not the case. Sometimes it will be completely wrong; mostly, it will miss a bit, and in between, it might be spot on.

Where traditional IT is based on precise instructions, AI is based on instructions and data. Standard IT provides accurate results. AI gives uncertain results. How uncertain depends both on the algorithms and on the data it uses.

When I submit a payable invoice to my financial system, it is read by an AI-based program that suggests how it should be posted, including date, creditor information, due date, expense account, the amount and the VAT. The AI does most of the work, but I often need to make a few adjustments.

When I press “enter,” I am one hundred per cent sure that the correct creditor is selected, that the VAT amount is transferred to the account for outstanding sales tax, that the net amount is booked on the expense account I have chosen, and that it is ready for reconciliation with the payment from the bank. When I generate a profit and loss report or a balance sheet, I know with one hundred per cent certainty that all posted transactions are included.

The fact that the results of a system using AI are only sometimes correct does not mean that it cannot be valuable. Far from it. It means you must know what you want to use it for and how to handle the uncertainty.
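One common way to handle that uncertainty is to let the AI propose and a human confirm whatever the AI is unsure about. The Python sketch below illustrates the idea with the invoice example. It rests on an assumption: that the AI attaches a confidence score between 0 and 1 to each suggested field. I do not know whether my financial system works this way internally, and the names `Suggestion` and `split_for_review`, the 0.9 threshold and the sample values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    field: str         # which part of the posting the AI filled in
    value: str         # the value the AI suggests
    confidence: float  # assumed score between 0.0 and 1.0


def split_for_review(suggestions, threshold=0.9):
    """Separate suggestions accepted as-is from those a human should check."""
    accepted = [s for s in suggestions if s.confidence >= threshold]
    to_review = [s for s in suggestions if s.confidence < threshold]
    return accepted, to_review


# Hypothetical suggestions for one scanned invoice.
suggestions = [
    Suggestion("creditor", "Example Supplier A/S", 0.98),
    Suggestion("expense_account", "Office supplies", 0.62),
    Suggestion("vat", "25%", 0.95),
]

accepted, to_review = split_for_review(suggestions)
for s in to_review:
    print(f"Please check {s.field}: suggested '{s.value}' (confidence {s.confidence:.0%})")
```

The uncertain step (the suggestion) is kept apart from the certain step (the posting). Nothing is booked until a person has confirmed the fields the AI was unsure about, and from then on the traditional, deterministic part of the system takes over.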

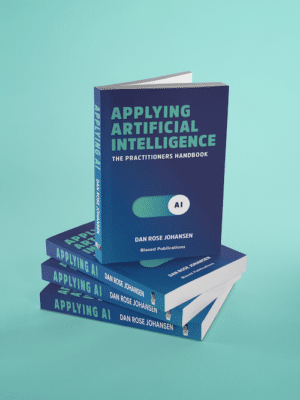

Dan Rose Johansen is the author of a book on artificial intelligence, which will be published this summer. I am the book’s editor and publisher. Our ambition is that the book should help organizations and companies use AI where it makes sense, within an acceptable budget and with a positive outcome.